It’s possible that I have a kind of childhood-goalkeeping-related risk-aversion OCD. I email myself at 2am so I don’t forget things I need to do in the morning, count the number of cars at traffic lights, and look behind me for attackers creeping up on the back post.

I’m also a broad-spectrum sports head and an intermittent maths nerd, so I compose compulsive critiques of any sports media which mistakenly thinks it can add up. I spend a lot of my time reading sports articles filled with statistics, which means I spend a lot of time feeling vaguely annoyed – but at the same time, ever-hopeful that I’m about to read the kernel of the next Moneyball. The right data, competently used, can help to show who performs sporting skills well – but in football the right data, or even any data, is thin on the ground (though growing thicker, very slowly).

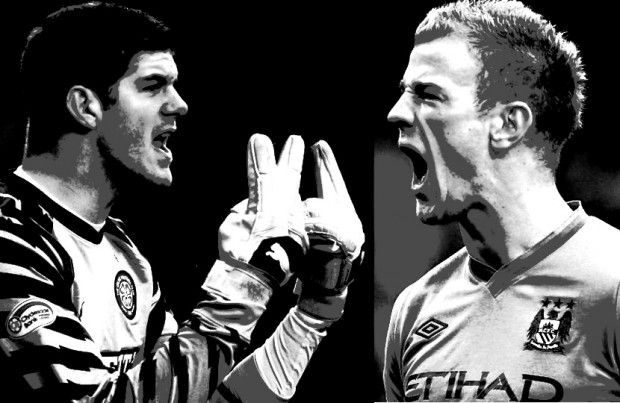

So when the headline “Joe Hart vs. Fraser Forster – A Comparison” popped up in my Google, nestled in the Bloomberg sports stats-y section, I admit to a tiny piquing of my interest – could some science be behind England’s goalkeeping selection?

My optimism lasted until I encountered the first chunk of rock pretending to be a scalpel:

Is this the comparison? Oh dear. But I had started reading, and the compulsive critique had started to form.

Firstly, the helpful interface makes the table sortable. One of the columns contains 1, 1, 1, 1 and 2, the other 0, 0, 1, 3 and 4. If you can’t sort this table in your head, you are not qualified to write – or perhaps even read – the article.

Secondly, of all the things that you could choose to measure about a goalkeeper, they’ve gone with “errors leading to shots/goals”? The selection of this data makes me react like Stephen Fry, 20 seconds into this video.

Here are just a few things a goalkeeper can do without creating an entry in this table:

- Drop a sitter of a cross into a crowded goal area, where it’s immediately cleared by an alert defender (no resulting shot, no resulting goal).

- Misjudge a simple ball outside the penalty area, get called for handball and red-carded. Free kick is crossed and headed away (no resulting shot, no resulting goal).

- Be Gianluca Pagliuca, and almost fumble the 1994 World Cup away (no resulting shot, no resulting goal).

In statistical analysis, this kind of failure is a failure to define your hypothesis. It sounds very science-y, but in this example it just means that you’re implicitly saying that whoever makes the fewest shot-causing plus goal-causing errors is the best goalkeeper. Right?

As youngsters say these days: yeah, nah.

Even assuming it were a good hypothesis, at a minimum you’d want to work out how frequently these errors happen as a percentage rather than a raw score, otherwise it’s tilted against whichever goalkeeper is blessed with teammates who let him see a little more of the action. But it’s difficult to define how often a goalkeeper has an “opportunity” to make an error – just about any time he makes a move at the ball, presumably, including when he ultimately chooses not to go after it. Once you’ve done that, all you need is some objective way of determining what an “error” is – a panel of expert judges, grading independently, should do the trick (baseball manages this, in less challenging circumstances). Finally, you need to calculate the level of confidence you have in your results – someone may have better results, but how sure can you be that they’re superior?
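For the curious, here is a minimal sketch of the first and last of those steps in Python: turn a raw error count into a rate per opportunity, then put a roughly 95% Wilson score interval around each rate to see whether the two keepers can actually be told apart. The function name `error_rate_interval` is hypothetical, and so are the counts. The sketch assumes the genuinely hard parts (defining an “opportunity” and an “error”) have already been done.

```python
import math


def error_rate_interval(errors: int, opportunities: int, z: float = 1.96):
    """Return (rate, low, high): the observed error rate per opportunity and
    its Wilson score interval (z = 1.96 gives roughly 95% coverage)."""
    if opportunities <= 0:
        raise ValueError("need at least one opportunity")
    n = opportunities
    p = errors / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to guard against floating-point drift at the edges.
    return p, max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical figures, NOT real data: keeper A makes 6 errors in 400
# opportunities, keeper B makes 8 errors in 600.
for name, errors, chances in [("Keeper A", 6, 400), ("Keeper B", 8, 600)]:
    rate, low, high = error_rate_interval(errors, chances)
    print(f"{name}: {rate:.2%} per opportunity (95% CI {low:.2%} to {high:.2%})")
```

With these made-up numbers the two intervals overlap almost entirely, which is the point: a handful of errors is nowhere near enough to rank two keepers.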

Bloomberg spares us all this boring-but-useful analysis, and skips ahead. The authors don’t claim that just one statistic can carry the whole load – and now I’ve read one, I can’t not read the others.

It starts with something even more impressive-looking: a bar chart.

In recent UCL play, Fraser Forster has averaged 3.90 saves per match: 1.88 from inside the box, and 1.88 from outside the box. The remaining 0.14 saves per match are, presumably, from balls parked precisely on the chalk at the edge of the penalty box. Joe Hart’s inside/outside saves, meanwhile, leave him in search of a further 1.1 saves per match. Shots from the stands? From another dimension? No source is provided for the figures, so we may never know. But the article does note that UCL “qualifying stage data [is] unavailable” – so if you’re ever looking at a stats website, and you see UCL qualifying stage data, you’re in the wrong place.
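The sanity check that should have happened before the chart went out takes only a few lines. Here is a minimal Python sketch using Forster’s per-match figures as quoted above. The helper name `unexplained_saves` is hypothetical.

```python
def unexplained_saves(total: float, inside_box: float, outside_box: float) -> float:
    """Per-match saves left over once inside- and outside-the-box saves are
    subtracted from the total. Every save is made from a shot taken either
    inside or outside the box, so for a consistent chart this should be zero."""
    # Round to the two decimal places the chart uses, to hide float noise.
    return round(total - inside_box - outside_box, 2)


# Forster's recent UCL averages, as reported in the article.
leftover = unexplained_saves(3.90, 1.88, 1.88)
print(f"Saves per match from nowhere: {leftover}")  # prints: Saves per match from nowhere: 0.14
```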

The chart produces some chin-scratching analysis from the authors, including a note on sample sizes: “this can cause issues”. Well, yes, it can – but only if you need a sortable table for a column of data with four ones in it. Absorb this chunk of first-year uni binomial theory and you can use a wide range of tests to work out how serious your sample size problem is.
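Here is one example of such a test, sketched in plain Python. Suppose, hypothetically, that two keepers with equal exposure made 6 and 8 errors. Those are the totals of the two table columns, but the table doesn’t make clear which keeper each column belongs to, so treat the pairing as an assumption. If the keepers were equally error-prone, each of the 14 errors would be equally likely to be either keeper’s, so one keeper’s count follows a Binomial(14, 0.5) distribution. An exact two-sided binomial test asks how surprising a 6-to-8 split would be under that assumption. The helper name `binomial_two_sided_p` is hypothetical.

```python
from math import comb


def binomial_two_sided_p(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test: the total probability, under
    Binomial(n, p), of every outcome no more likely than the observed k."""
    def pmf(i: int) -> float:
        return comb(n, i) * p**i * (1 - p) ** (n - i)

    observed = pmf(k)
    # Small relative tolerance so tied probabilities aren't lost to float noise.
    return sum(pmf(i) for i in range(n + 1) if pmf(i) <= observed * (1 + 1e-7))


# Hypothetical split: 6 errors for one keeper, 8 for the other.
p_value = binomial_two_sided_p(6, 6 + 8)
print(f"p = {p_value:.2f}")  # prints: p = 0.79
```

A p-value of about 0.79 means a 6-to-8 split is entirely unremarkable if the two keepers were equally error-prone. On this data, the column can’t separate them.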

Regardless of whether Hart and Forster can be separated on this front, here’s a thought about all of this shot/save business: positioning is, as far as I remember, still a goalkeeping skill. One benefit of being a well-positioned, athletic goalkeeper is that strikers might feel that, in order to have a chance of scoring, they have to aim for the extreme corners of the goal. Mightn’t this result in more than a handful of shots which miss, and therefore don’t find their way into this impressive and colourful chart? I sense this is another failure of the implied hypothesis – save a higher proportion of shots, from inside/outside the box, and you’re a better keeper. Really?

And then comes the final insult: “[M]any would argue that Forster has a weaker defence in front of him, with goals conceded inside the box likely to be from areas that left Celtic’s number 1 with little chance to make a save.” This is shorthand for: “There are factors which affect these figures. But we don’t know how, because we can’t quantify the impacts.” Then what are all your numbers for?

I’ve barely finished asking that anguished question when I’m reminded of how the sentence started: “Many would argue…” And when they mount these arguments, would they call upon any figures? Because that would, unlike the Hart-Forster comparison, make for some interesting reading. Two pages, and we’re no closer to knowing anything. If Forster or Hart fumbled the ball this badly, would either of them be up for England selection?

It might appear that, with Hart/Forster/numbers lying hacked to pieces on the floor, I’m having fun doing this – but actually I’m quite depressed. An article with some competently collected data, and a thoughtfully constructed hypothesis, intelligently tested, would have been a compelling read.

How about a test of the “weak Celtic defence” hypothesis? How often is Forster left one-on-one in the penalty area? Or with one defender, one attacker? Two attackers, one defender? Out-numbered defenders within thirty yards of goal? Free headers inside eight yards? [Insert your own examples here.] How can these numbers be accumulated? How can they be made relative, rather than absolute – perhaps using the measuring stick of opponents’ performances against other opponents? How can we create robust definitions to accommodate the Pagliuca-fumbles-onto-the-post components, and to de-emphasise poor defensive positioning when a trailing team is chasing a game late?
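Here is one way the “relative, rather than absolute” idea might be sketched, assuming someone has already counted a chance type (say, one-on-ones) consistently. For each opponent, compare how many such chances it created per match against Celtic with how many it averaged against everyone else. A ratio above 1 would suggest Celtic concede more of them than those opponents usually manage to create. Every team label and count below is an invented placeholder, and `defence_index` and `OpponentRecord` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class OpponentRecord:
    """Per-match counts of one chance type for a single opponent (hypothetical data)."""
    name: str
    vs_target: float      # chances created per match against the team under study
    vs_others_avg: float  # chances created per match, on average, against other teams


def defence_index(records: list[OpponentRecord]) -> float:
    """Ratio of chances actually conceded to chances 'expected' from each
    opponent's record against other teams. 1.0 means average; above 1.0
    means the defence concedes more than those opponents usually create."""
    conceded = sum(r.vs_target for r in records)
    expected = sum(r.vs_others_avg for r in records)
    if expected == 0:
        raise ValueError("opponents created no chances elsewhere; ratio undefined")
    return conceded / expected


# Invented placeholder numbers, for illustration only.
sample = [
    OpponentRecord("Opponent A", vs_target=2.0, vs_others_avg=1.5),
    OpponentRecord("Opponent B", vs_target=1.0, vs_others_avg=1.0),
    OpponentRecord("Opponent C", vs_target=3.0, vs_others_avg=2.5),
]
print(f"Defence index: {defence_index(sample):.2f}")
```

Summing per-match rates like this weights every opponent equally. A fuller version would weight each opponent by matches played and attach an interval to the ratio, for the same sample-size reasons as above.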

I imagine that, to Bloomberg, all that sounds very difficult and time-consuming. Sorry folks, real maths is. But for those of us who do crave something mathematically substantial to read about the game we love, there are some hard facts we need to face. Football has no tradition of collecting statistics, and those that are kept include some that have been historically ill-defined (goal or own goal/save or intercepted cross) and others, until recently, ignored (accurate passes, possessions in opposition penalty area… almost anything).[i]

Therefore, anyone motivated enough to spend time seeking new discoveries about the game will need to start by amassing large quantities of data. Whatever data has been kept is also being kept secret, so that means starting from scratch. That lengthens the process by orders of magnitude, and narrows the likely field of researchers to somewhere near zero – the dedicated few who sacrifice a lot of time to tease out the beginnings of some interesting research.

The few organisations with the resources to regularly compile data include big media companies, whose main interest is in producing puddle-shallow clickbait; big consulting companies, whose discoveries will be shared only with the highest bidder; and the clubs themselves, who are unlikely to want to share with anyone.

Sadly, it seems that the most likely outcome is more dumb articles, and more angry reading. But if anyone wants to start crowdsourcing football data collection and put that sort of writing out of business – well, that would just completely take advantage of my compulsion to watch sport and count things.

I want to find out if Celtic’s defence is as weak as many would argue. Anyone care to help?

Jack Martin is a Melbourne writer and founder of the little-read New Statsman blog. When he plays golf, his counting obsession really hurts.

____________________

[i] Side note: not surprising, really, for a sport which makes little pretence that it cares for precision, even in such fundamental things as the size of the field, or the length of the game.

The first statement is redundant, unless your planned field is an illegal 100-yard x 100-yard square. Make the touchline 100 yards + one blade of grass, and you’re all set.

Allow for time lost for any cause. Or not. Over to your discretion, Mr Ref.
